‘‘We’re already cyborgs… your phone and your computer are an extension of you’’.

These words surely send shivers through your body as you start pondering their significance. They make you stumble, tremble and fall out of the sweet equilibrium that has assured you until now that a barrier exists between the human and the nonhuman. That assurance holds much less today, when the line seems blurrier than ever before. With that in mind, how legitimate can these words truly be? When I first heard Elon Musk discuss the dangers of AI, I could not wrap my head around this very sentence. It suggests that we have come closer to being computational beings than we had thought. At the same time, it makes you question how far we are from the ultimate level of dominance by the transcendent powers of AI.

Although we seem to understand some of the dark sides of AI, the realities of this technology are gloomier than we imagine. This form of intelligence has brought many benefits to our society, yet many of its abilities have led scientists to consider their implications. In fact, the capabilities of AI stretch into far-reaching domains and put in jeopardy many aspects of life as we have known it.

Upsides of the use of technological superintelligence

Superintelligence programs have undoubtedly contributed massively to the well-being of our world. By now, our reliance on the many digital tools enhanced by AI is evident. Among the programs already integrated into daily life, Google Maps best exemplifies the interconnection between humans and technological superintelligence. The navigation app, as we all know, lets users choose the best route to a given destination. To do so, its software ranks routes using statistical data on variables such as traffic and distance.
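To make the idea of ranking routes by traffic and distance concrete, here is a minimal sketch. It is not how Google Maps works internally (that is proprietary); it simply assumes each road segment has a distance and a hypothetical traffic factor, multiplies the two into a cost, and applies Dijkstra's shortest-path algorithm. The names `best_route`, `roads`, and the place labels are all invented for illustration.

```python
import heapq

def best_route(graph, start, goal):
    """Return (total_cost, path) for the cheapest route, or (inf, []) if none exists.

    graph maps a place to a list of (neighbor, distance_km, traffic_factor).
    The cost of a segment is assumed to be distance_km * traffic_factor.
    """
    queue = [(0.0, start, [start])]  # (cost so far, current place, path taken)
    visited = set()
    while queue:
        cost, place, path = heapq.heappop(queue)
        if place == goal:
            return cost, path
        if place in visited:
            continue
        visited.add(place)
        for neighbor, distance_km, traffic_factor in graph.get(place, []):
            if neighbor not in visited:
                new_cost = cost + distance_km * traffic_factor
                heapq.heappush(queue, (new_cost, neighbor, path + [neighbor]))
    return float("inf"), []

# Hypothetical road network: the highway is shorter but heavily congested.
roads = {
    "home": [("highway", 5.0, 3.0), ("backroad", 8.0, 1.0)],
    "highway": [("office", 5.0, 3.0)],
    "backroad": [("office", 6.0, 1.0)],
}

cost, path = best_route(roads, "home", "office")
print(cost, path)
```

In this toy network the longer back road wins because congestion triples the cost of every highway kilometer, which is the kind of trade-off a navigation app has to weigh.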

Other devices and features, including online banking, face recognition, media recommendation, smart assistants, and even autonomous vehicles, are integrated into many of our routines, promising to simplify our lives with their efficiency. Not to mention the improvements in public health, where progress has been made on brain-machine interfaces that allow people with paralysis to control a robotic limb (for example an arm) and carry out basic instructions through electrodes implanted directly in the neural tissue.

Harnessing AI for social benefit surely promotes a positive image of what these machines can do. What the opponents of AI advancement are concerned with, however, is the (mis)use of the technology: should it fall into the wrong hands or follow a misspecified objective, the consequences could be severe enough to bring the human race to extinction. This gloomy scenario, which many scientists and technology experts consider inevitable given the speed of development and the lack of regulatory oversight, is largely disregarded by those who work in the field of AI and promote its advances.

‘‘You’re essentially setting up a chess match between the human race and machines that are more intelligent than us, and we know by now what happens when we play chess against machines.’’

Stuart Russell

Downsides of the use of technological superintelligence

The ramifications of this unprecedented technological progress cover many areas of our lives. One of the most obvious and most concerning is its effect on the job market: as machines replace human skill with greater efficiency, mass unemployment would follow. Elon Musk suggests a universal basic income as a remedy for the resulting financial harm. According to him, the abundant output of goods, coupled with the low prices that will accompany the era of digital transformation, will make life easier to sustain. In his view, although these developments would ensure a better standard of living, the real challenge would become the feeling of purposelessness that people will have to confront. A jobless life would require people to derive meaning and purpose from sources other than their profession, which poses the next big challenge of the post-AI era.

Why is it so difficult for scientists to find a way out of this unregulated system of technological advancement? What is it that pulls us towards the deliberate development and use of superintelligence technology? Why can international institutions and governments not adopt measures that rein in free experimentation with AI?

Internationally, efforts have been made, and are still being made, to slow AI advancement and provide a framework of preventative measures. Discussions held in Geneva under the UN Convention on Certain Conventional Weapons have brought scientists, politicians, and experts from relevant fields together in an attempt to address the threat posed by the unmonitored progress of this technology, alongside other humanitarian concerns. One of AI’s most renowned pioneers, Stuart Russell, argues that it is not mass unemployment that most threatens the future of humanity in the face of AI advancement, but rather the prospect of increasingly advanced autonomous weapons in military conflict.

‘‘Being attacked by an army of terminators is a piece of cake compared to being attacked by this kind of [autonomous] weapon’’

Stuart Russell

Autonomous weapons

Autonomous weapons are, according to the UN definition, ‘‘weapons that locate, select, and engage human targets without human supervision’’. “Killer robots”, as they are also called, have no evil intent, reasoning, or empathy: they are simply programmed to reach their target and eliminate it. These robots could, for example, be set to target a group of people with specific physical traits in a certain country and eliminate that group without collateral damage. This does sound like sci-fi, but in view of wars around the world, it has been argued that such weaponry has already been put to use. Moreover, it is as real as it can get, considering that it is already being advertised online.

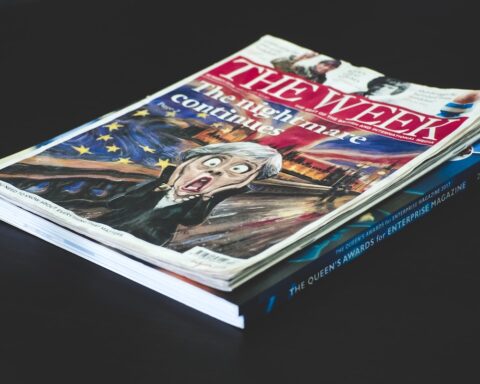

Yuval Noah Harari, a historian and writer, recently discussed the evolution and repercussions of the Russian invasion of Ukraine in a conversation with one of the TED curators. The effects of this unfolding disaster have been felt and continue to reverberate through many countries. In Europe, it is currently Ukraine that bears the brunt of the destruction, yet internationally everyone will become a victim of this war and face the full magnitude of its effects as they take shape.

In light of the invasion, many nations have been prompted to change how much of their budget goes to national defense. This move, initiated by Germany, has been repeated in countries near and far, such as France, Romania, and China. It shows, as Harari explains, that we have ended an era of peace in which most national budgets dedicated large shares of their money to important sectors such as health care, education, and the fight against climate change. Now we are moving towards a time when funds previously reserved for these sectors, which are vital to citizens’ well-being, will be spent on weaponry.

‘‘The money that should go to health care, that should go to education, that should go to fight climate change, this money will now go to tanks, to missiles, to fighting wars.’’

Yuval Noah Harari

The implications for the advancement of AI are enormous: many leaders now consider national defense a priority, and artificial intelligence is a great asset in military defense and attack. As a result, the concern about AI and its regulation, which had only recently started to gain importance, may now fade: the push for supervision might come to a halt while developments in the field increase exponentially.

How did we get here?

This military invasion fosters an attitude that urges nations to join the race towards the development of lethal autonomous weapons and defense technology. Sam Harris discusses this scenario in his TED Talk, where he explains that if Silicon Valley were about to deploy a superintelligent AI, other world powers such as China or Russia would inevitably push to develop a substantially more intelligent technological weapon that could help them win the race towards domination or protection. Unfortunately, this scenario is not far off. Currently, we see a reluctance to take responsibility for internationally monitored oversight of the field. Russia, the U.S., and Israel are among the political actors that have expressed their unwillingness to enact a legally binding framework that would prevent these weapons.

What’s worse is that AI does not currently count among the world’s most pressing issues in the eyes of the public. More precisely, it is not even a thought that frightens most people. As Sam Harris puts it, in contrast to other potential causes of human extinction, such as global pandemics, nuclear war, or the poverty and malnutrition caused by global warming, the domination of humans by AI remains a topic of science fiction in our culture. It is an idea deemed worthy of dramatic depiction by movie franchises, which shows how much it has become a form of entertainment in our lives. And what compounds our delayed consideration of this issue is that certain computer scientists reassure us that a lot of time still needs to pass before we even begin to consider the dangers of AI, that ‘‘we’re still in the infancy of AI’’.

Many organizations, such as the Association for Advancing Automation, post articles on their websites that counter the ‘‘misconceptions’’ that opponents of AI bring forward. In contrast, Russell illustrates how rapidly science can advance with an example from the past. He explains how, in 1933, following Ernest Rutherford’s assertion that we cannot extract energy from atoms, the scientist Leo Szilard conceived, only one day later, what had until then been completely inconceivable: the nuclear chain reaction, the idea that led to the first nuclear bomb little more than a decade later. Russell thereby contradicts the assertion that science is slow-paced and urges us to rethink how quickly we must act.

Measures taken

Many experts and scientists have drawn up lists of measures that could prevent further AI advancement, as well as solutions for a scenario in which these advancements cannot be banned. Figures in the technology industry, such as Elon Musk, propose coupling a high-bandwidth interface to the brain to achieve a symbiosis between human and machine intelligence, which according to him would help solve the problems of control and usefulness.

A brain-computer interface is essentially a computer-based system that acquires brain signals, analyzes them, and translates them into commands for an output device that carries out the action. Currently, the speed at which humans exchange information with one another cannot compare with computer-based systems, which process information at a far higher rate. In other words, since we cannot avert superintelligence, we must raise the output of human intelligence to a level that could rival the powers of AI.
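The signal-to-action chain of a brain-computer interface can be pictured with a toy sketch. Everything here is an assumption made for illustration: real systems use far richer signal processing, and the names `extract_feature`, `translate`, `RoboticArm`, and the threshold value are hypothetical. The sketch only shows the three stages: analyze a window of signal readings, translate the result into a command, and pass it to an output device.

```python
from statistics import mean

def extract_feature(samples):
    """Analyze a window of readings: here, simply the average signal power."""
    return mean(s * s for s in samples)

def translate(feature, threshold=1.0):
    """Turn the feature into a command (the threshold is an arbitrary assumption)."""
    return "move_arm" if feature > threshold else "rest"

class RoboticArm:
    """Stand-in output device that just records the commands it receives."""
    def __init__(self):
        self.log = []

    def execute(self, command):
        self.log.append(command)

arm = RoboticArm()
# Two made-up windows of readings: a quiet one and a strong one.
windows = [[0.1, -0.2, 0.1], [1.5, -1.8, 2.0]]
for window in windows:
    arm.execute(translate(extract_feature(window)))

print(arm.log)
```

The quiet window stays below the threshold and the strong one exceeds it, so the device receives one "rest" and one "move_arm" command.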

Other computer scientists, such as Russell, propose an AI ‘‘kill switch’’ meant to shut down the robot’s mission should anything start to run amok and become threatening to humanity. One cause could be a misspecified objective given to the AI, which should not operate aimlessly but be given specific, relevant missions.

Russell further postulates that another answer to this tech progress is building oracles: machines designed to answer questions that exceed the capacities of human intelligence, but unable to modify the world outside their limited environment. Essentially, he proposes a change to the human-machine relationship, in which we use AI to become more intelligent and more aware of our surroundings. As a result, the technology would provide us with answers that might help solve some of the world’s most pressing issues, such as fighting climate change and eliminating poverty and disease.

But, most importantly, there remains the need for an international effort to ensure regulatory oversight, an initiative that, in the face of recent developments in Eastern Europe, would pose no threat to scientific practice but lay the foundation for a fair and peaceful world.